# ignore this line

%load_ext pretty_jupyter

The pretty_jupyter extension is already loaded. To reload it, use: %reload_ext pretty_jupyter

Introduction - Data Organisation¶

This walkthrough is part of the ECLR page.

Here we will demonstrate how to organise, concatenate and merge data from different datasets into one dataset. In this walkthrough we will demonstrate how to do this in Python. In what follows we will download two different files with country level information. One will be a file with inflation data from the OECD. The other w

Preparing your workspace¶

As usual we begin a notebook or scriptfile by loading the libraries we anticipate to use.

# Importing the os module

import os

import pandas as pd

import numpy as np

from lets_plot import *

# Lets_plot requires a setup

LetsPlot.setup_html()

# Set your working directory

# os.chdir("C:/Pycode") # Replace with your drive and path

File upload¶

File 1 - OECD inflation data¶

The first file we will upload is a file from the OECD database. From that link you will have access to download an EXCEL file with inflation data ("Inflation-data.xlsx"). Save that in your working folder (or as here in a sub-folder "data") and load it. Have a look at the file you download from there. There are a number of different sheets for different types of inflation and different frequencies. We shall look at the annual Headline consumer price inflation which are saved on the "hcpi_a" worksheet.

data_file = "../data/Inflation-data.xlsx"

inf_data = pd.read_excel(data_file, sheet_name = "hcpi_a")

inf_data.head()

| Country Code | IMF Country Code | Country | Indicator Type | Series Name | 1970 | 1971 | 1972 | 1973 | 1974 | ... | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | Note | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | AFG | 512.0 | Afghanistan | Inflation | Headline Consumer Price Inflation | 25.51 | 25.51000 | -12.52000 | -10.680000 | 10.23000 | ... | -0.660000 | 4.380000 | 4.976 | 0.63 | 2.302 | 5.443000 | 5.062000 | 10.600000 | NaN | Annual average inflation |

| 1 | ALB | 914.0 | Albania | Inflation | Headline Consumer Price Inflation | NaN | NaN | NaN | NaN | NaN | ... | 1.910179 | 1.291234 | 1.987 | 2.03 | 1.411 | 1.620887 | 2.041472 | 6.725203 | 5.565224 | Annual average inflation |

| 2 | DZA | 612.0 | Algeria | Inflation | Headline Consumer Price Inflation | 6.60 | 2.62664 | 3.65631 | 6.172840 | 4.69961 | ... | 4.391666 | 5.800304 | 5.591 | 3.51 | 1.952 | 2.415131 | 7.226063 | 9.265516 | 9.322174 | Annual average inflation |

| 3 | AGO | 614.0 | Angola | Inflation | Headline Consumer Price Inflation | 7.97 | 5.78000 | 15.80000 | 15.670000 | 27.42000 | ... | 9.159000 | 32.377731 | 29.844 | 19.63 | 17.079 | 21.024000 | 23.846111 | 23.826819 | 13.644102 | Annual average inflation |

| 4 | ATG | 311.0 | Antigua and Barbuda | Inflation | Headline Consumer Price Inflation | 8.87 | 8.63000 | 12.67000 | 21.719999 | 12.97000 | ... | 0.969000 | -0.489000 | 2.425 | 1.21 | 1.475 | 1.058000 | 1.627000 | 7.531078 | 5.067139 | Annual average inflation |

5 rows × 60 columns

As you can see, each line corresponds to one country and then columns represent years. This is how you will often files for macro data presented, but it is not a good format to work with time series data. We will want the data with each row representing one country and one year of data. We will do that below. But before proceeding we will remove a number of variables we will not be using further.

inf_data = inf_data.drop(['IMF Country Code','Series Name', 'Note'], axis = 1)

# attempt to run the code without "axis = 1". You will get an error message

# axis = 1 tells python to look for column names to drop

File 2 - ECB bank interest rates¶

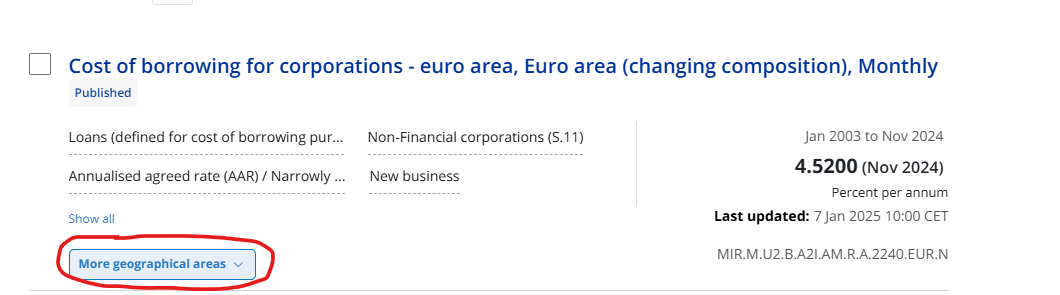

The second file we will look at is a set of European interest rates. On this occasion we willbe looling at corporate borrowing rates offered by banks across countries in the European Union. Such data are available through the European Central Bank database. This link leads you straight to the page from which you can access the data. You should see the following

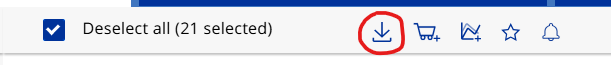

Click on the "more geographic areas" button and then "Select all" which ensures that you get the interest rates at country level. Once you have done that click the download button

You will then be given a choice of dataformats and you should chose "XLS long" as this produces one row of data for each country-period. The data are stored in data sheet "DATA(MIR)".

data_file = "../data/ECB_interestrates.xlsx"

int_data = pd.read_excel(data_file, sheet_name = "DATA(MIR)")

int_data.head()

| DATE | TIME PERIOD | OBS.VALUE | OBS.STATUS | OBS.COMMENT | SERIES KEY | TITLE | FREQUENCY | FREQUENCY (DESC.) | REFERENCE AREA | ... | MFI INTEREST RATE DATA TYPE | MFI INTEREST RATE DATA TYPE (DESC.) | AMOUNT CATEGORY | AMOUNT CATEGORY (DESC.) | BS COUNTERPART SECTOR | BS COUNTERPART SECTOR (DESC.) | CURRENCY OF TRANSACTION | CURRENCY OF TRANSACTION (DESC.) | IR BUSINESS COVERAGE | IR BUSINESS COVERAGE (DESC.) | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2003-01-31 | Jan 2003 | 4.65 | A | NaN | MIR.M.AT.B.A2I.AM.R.A.2240.EUR.N | Cost of borrowing for corporations - Austria | M | Monthly | AT | ... | R | Annualised agreed rate (AAR) / Narrowly define... | A | Total (A) | 2240 | Non-Financial corporations (S.11) (2240) | EUR | Euro (EUR) | N | New business (N) |

| 1 | 2003-02-28 | Feb 2003 | 4.60 | A | NaN | MIR.M.AT.B.A2I.AM.R.A.2240.EUR.N | Cost of borrowing for corporations - Austria | M | Monthly | AT | ... | R | Annualised agreed rate (AAR) / Narrowly define... | A | Total (A) | 2240 | Non-Financial corporations (S.11) (2240) | EUR | Euro (EUR) | N | New business (N) |

| 2 | 2003-03-31 | Mar 2003 | 4.51 | A | NaN | MIR.M.AT.B.A2I.AM.R.A.2240.EUR.N | Cost of borrowing for corporations - Austria | M | Monthly | AT | ... | R | Annualised agreed rate (AAR) / Narrowly define... | A | Total (A) | 2240 | Non-Financial corporations (S.11) (2240) | EUR | Euro (EUR) | N | New business (N) |

| 3 | 2003-04-30 | Apr 2003 | 4.22 | A | NaN | MIR.M.AT.B.A2I.AM.R.A.2240.EUR.N | Cost of borrowing for corporations - Austria | M | Monthly | AT | ... | R | Annualised agreed rate (AAR) / Narrowly define... | A | Total (A) | 2240 | Non-Financial corporations (S.11) (2240) | EUR | Euro (EUR) | N | New business (N) |

| 4 | 2003-05-31 | May 2003 | 4.17 | A | NaN | MIR.M.AT.B.A2I.AM.R.A.2240.EUR.N | Cost of borrowing for corporations - Austria | M | Monthly | AT | ... | R | Annualised agreed rate (AAR) / Narrowly define... | A | Total (A) | 2240 | Non-Financial corporations (S.11) (2240) | EUR | Euro (EUR) | N | New business (N) |

5 rows × 27 columns

You can see that there are lots of variables (columns). The ones we wish to keep are DATE, TIME PERIOD, OBS.VALUE and REFERENCE AREA (DESC.).

int_data = int_data[['DATE', 'TIME PERIOD', 'OBS.VALUE', 'REFERENCE AREA (DESC.)']]

int_data.head()

| DATE | TIME PERIOD | OBS.VALUE | REFERENCE AREA (DESC.) | |

|---|---|---|---|---|

| 0 | 2003-01-31 | Jan 2003 | 4.65 | Austria (AT) |

| 1 | 2003-02-28 | Feb 2003 | 4.60 | Austria (AT) |

| 2 | 2003-03-31 | Mar 2003 | 4.51 | Austria (AT) |

| 3 | 2003-04-30 | Apr 2003 | 4.22 | Austria (AT) |

| 4 | 2003-05-31 | May 2003 | 4.17 | Austria (AT) |

These are monthly data. before we shall merge these with the annual inflation data we will aggregate the monthly interest rates to annual values. The way we do this here is by averaging the 12 monthly rates in each year to an average rate. To achieve this we need a column which tells us what year an observation comes from. We can draw that information either from DATE or from TIME PERIOD. Let's check the data formats first.

int_data.info()

<class 'pandas.core.frame.DataFrame'> RangeIndex: 4807 entries, 0 to 4806 Data columns (total 4 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 DATE 4807 non-null object 1 TIME PERIOD 4807 non-null object 2 OBS.VALUE 4807 non-null float64 3 REFERENCE AREA (DESC.) 4807 non-null object dtypes: float64(1), object(3) memory usage: 150.3+ KB

DATE is an object, so python does not recognise it as a date. Let's change that by converting the DATE columns to datetime format.

int_data["DATE"] = pd.to_datetime(int_data["DATE"] )

int_data.info()

<class 'pandas.core.frame.DataFrame'> RangeIndex: 4807 entries, 0 to 4806 Data columns (total 4 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 DATE 4807 non-null datetime64[ns] 1 TIME PERIOD 4807 non-null object 2 OBS.VALUE 4807 non-null float64 3 REFERENCE AREA (DESC.) 4807 non-null object dtypes: datetime64[ns](1), float64(1), object(2) memory usage: 150.3+ KB

Converting the DATE column to datetime format makes the extraction of the year format easy. Of course, this is unlikely to be a task you will do daily and hence it is likely that you will have to look up hpw to do that whenever you need it.

How to find the right code?¶

Task - Extract year from datetime variable¶

You have date information (in particular set to the last day of every month) in the DATE column of your dataframe (int_data). You wish to create a new variable (called YEAR) that only contains the year information for each row.

Internet Search¶

One way to obtain help is to go to your favorite internet search engine and search for something like "python extract year from datetime format". This is likely to guide you to a number of useful pages with strategies to achieve this task. There will be different ways to achieve this. One example is this page which offers (amongst others possibilities) the following example:

# Using pandas.Series.dt.year()

# the following code has been uncommented as it would create an error

# df['Year'] = df['InsertedDate'].dt.year

# print("Get the year from the datetime column:\n", df)

This then needs to be adjusted to work for the object and variable names in our dataset:

int_data['YEAR'] = int_data['DATE'].dt.year

This has no created a new variable YEAR that contains the year only. Look at int_data to confirm that this has worked.

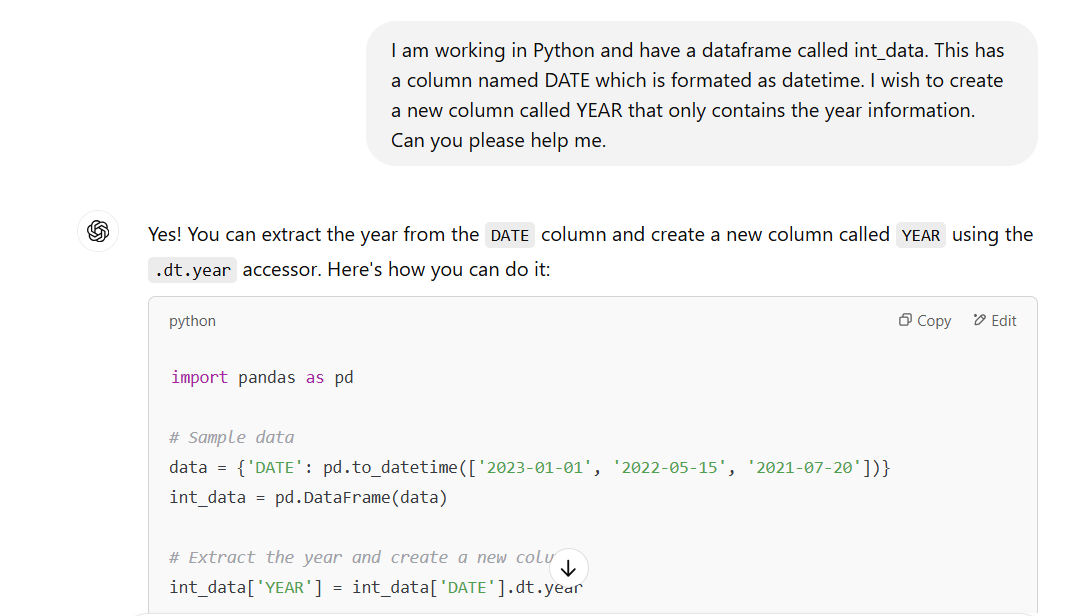

Using ChatGPT or other LLMs¶

Another way to get help with such a problem is to use ChatGPT (or another LLM) to help you with the issue on hand. Below you can see my interaction with ChatGPT (here version 4o). As you can see, you will have to setup the problem very clearly.

As you can see, it basically offers the same solution as the solution we identified through a search process.

¶

Now we are in a position to aggregate the interest rate data by year.

int_data_annual = int_data.groupby(['REFERENCE AREA (DESC.)','YEAR'], as_index=False).mean()

# try to run this without ", as_index=False" to see what this option achieves

Anticipating that we will want to merge the data with those in inf_data we will have to work the country variable. In inf_data, we have data from Austria identified as follows

| Country Code | Country |

|---|---|

| AUT | Austria |

In int_data, however, observations are identified by

| REFERENCE AREA (DESC.) |

|---|

| Austria (AT) |

If we want to merge the data, we need Python to enable Python to match rows from Austria with each other, meaning that both data objects should have a column with identical identifiers for Austria. We will do that in two steps. First we split the REFERENCE AREA (DESC.) variable in int_data into two columns, one for the name and one for the two letter code. Once we have done that we should be able to match on the basis of the country name. However, some countries and terretories have slightly different spellings in different organisations. It is therefore best to match on the unique country identifiers. Here, the inf_data file uses three letter codes (e.g. "AUT") and the int_data file uses two letter codes (e.g. "AT"). We will therefore also add a three letter country code to int_data.

How to find the right code?¶

Task - Split cells and translate country codes¶

Take the REFERENCE AREA (DESC.) variable and turn it into three variables as follows

| REFERENCE AREA (DESC.) |

|---|

| Austria (AT) |

to

| Country | Code2 | Code3 |

|---|---|---|

| Austria | AT | AUT |

This is basically two steps:

- Split "Austria (AT)" to "Austria" and "AT".

- Add another variable that uses the info in "AT" to produce the respective three letter code "AUT".

For the 2nd step you will either need to use the information provided by IBAN as a lookup table or see whether someone has written a convenient function to achieve this task.

Internet Serach¶

You could use internet search to tackle this issue, but we recommend, on this occasion to ask an AI engine for a proposed solution.

Ask ChatGPT¶

As always, if you are asking an AI engine for help you have to think carefully about the instructions and the necessary information you have to pass to the AI engine. Here we used the following instructions:

"I have a panda dataframe in Python (called int_data), which has a column called COUNTRY. Values in that column are formatted as a combination of the country name and a two-letter country code, e.g. "Austria (AT)". I wish to split this into two columns, one with the country name and one with the two letter country code. Then I wish to add a third column that has the respective three letter country code. Can you help me with this?"

Here is a link to the Chat from which you can see the suggested solution.

¶

Here we implement the code we were suggested by ChatGPT.

int_data_annual[['Country', 'Country Code2']] = int_data_annual['REFERENCE AREA (DESC.)'].str.extract(r'(.+)\s\((\w{2})\)')

int_data_annual.head(2)

| REFERENCE AREA (DESC.) | YEAR | OBS.VALUE | Country | Country Code2 | |

|---|---|---|---|---|---|

| 0 | Austria (AT) | 2003 | 4.095000 | Austria | AT |

| 1 | Austria (AT) | 2004 | 3.605833 | Austria | AT |

You can see that indeed the first step has been achieved exactly as we anticipated. You do not have to understand all the details of the above line. The str.extract() function takes the string found in the REFERENCE AREA (DESC.) column and splits it into two new columns Country and Country Code2 (discarding the parentheses). The term r'(.+)\s\((\w{2})\)' is called a regular expression. It basically does what we asked the code to do. These regular expressions are very complicated which is why asking ChatGPT and testing the code is the way to go. You can check the resulting country codes from the following line:

int_data_annual['Country Code2'].unique()

array(['AT', 'BE', 'HR', 'CY', 'EE', 'U2', 'FI', 'FR', 'DE', 'GR', 'IE',

'IT', 'LV', 'LT', 'LU', 'MT', 'NL', 'PT', 'SK', 'SI', 'ES'],

dtype=object)

For the 2nd step we were recommended to use a package/library called pycountry. Ensure you install this on your computer if you do not have this package. This is done with the pip install pycountry command from the Terminal. If you need to be reminded how to deal with libraries you could consult this page on ECLR.

import pycountry

def get_alpha3_code(alpha2):

try:

return pycountry.countries.get(alpha_2=alpha2).alpha_3

except AttributeError:

return None # Return None if not found

# Apply function to get the three-letter code

int_data_annual['Country Code'] = int_data_annual['Country Code2'].apply(get_alpha3_code)

int_data_annual.head(2)

| REFERENCE AREA (DESC.) | YEAR | OBS.VALUE | Country | Country Code2 | Country Code | |

|---|---|---|---|---|---|---|

| 0 | Austria (AT) | 2003 | 4.095000 | Austria | AT | AUT |

| 1 | Austria (AT) | 2004 | 3.605833 | Austria | AT | AUT |

The code did exactly what we wanted. We now have two potential column on which we could match data from inf_data and int_data, namely the columns Country and Country Code. In fact, let's drop the other two columns identifying the country.

int_data_annual = int_data_annual.drop(['REFERENCE AREA (DESC.)','Country Code2'], axis = 1)

int_data_annual.head(2)

| YEAR | OBS.VALUE | Country | Country Code | |

|---|---|---|---|---|

| 0 | 2003 | 4.095000 | Austria | AUT |

| 1 | 2004 | 3.605833 | Austria | AUT |

Organise dataframes¶

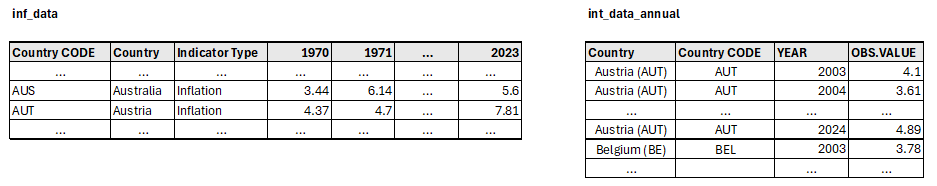

It is very common that you may want to reorganise spreadsheets as running regressions may require a different data structure than graphing data. It is for this reason that you have to be prepared to re-organise your data alone. On this occasion we also need to reorganise data such that we can merge the two datasets. Here is a schematic view of how the data look like.

inf_data has a so-called wide structure, int_data_annual has a long data structure. Long structures are typically easier to work with. In order to merge these we will have to add another variable to int_data_annual namely a variable called Indicator Type which tells us that the data in the int_data dataframe are interest rates. This is because we are aiming at merging the data into the following type of structure.

| Country | Country Code | YEAR | Indicator Type | OBS.VALUE |

|---|---|---|---|---|

| Austria | AUT | 2003 | Inflation | 1.356 |

| Austria | AUT | 2003 | Interest Rate | 4.095 |

| Austria | AUT | 2004 | Inflation | 2.061 |

| ... | ... | ... | ... | ... |

let us start by adding this column.

int_data_annual['Indicator Type'] = "Interest rate"

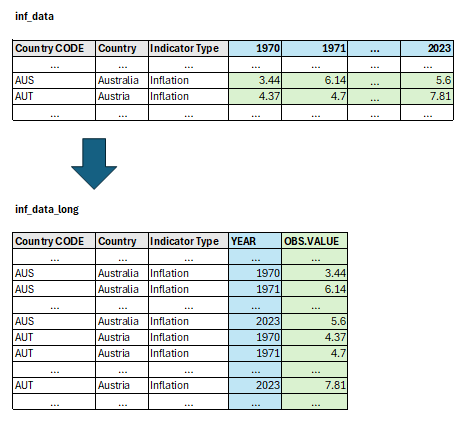

Now we need to translate the inf_data table into a long format where each row represents one country-year. The following image indicates what we wish to achieve.

Note how the colour coding indicates how the information is to be translated. Translating data from wide to long format is done using the melt function. This type of operation is important and is frequently required.

# Determine which variables should stay (grey, id_vars in melt) and which should become a variable (blue, value_vars in melt)

indic_vars = inf_data.columns[:3] # picks the first 3 (0 to 2) variable names

vars_to_year = inf_data.columns[3:] # picks the variable names that are years (from 4th variable ot end)

# Convert wide to long format

inf_data_long = inf_data.melt(id_vars = indic_vars, value_vars = vars_to_year,

var_name='YEAR', value_name='OBS.VALUE')

# Convert 'Year' column to integer

inf_data_long['YEAR'] = inf_data_long['YEAR'].astype(int)

Finally we are in a position to merge the data into a new dataframe, comb_data_long. As both datasets are in long format and each country-year-variable has a different row, all we need to do is to attach the two spreadsheets to each other. Let's just ensure that the columns have the same order (look at inf_data_long.columns and int_data_annual.columns ).

comb_data_long = pd.concat([inf_data_long,int_data_annual])

This file now has the desired structure:

| Country | Country Code | YEAR | Indicator Type | OBS.VALUE |

|---|---|---|---|---|

| Austria | AUT | 2003 | Inflation | 1.356 |

| Austria | AUT | 2003 | Interest Rate | 4.095 |

| Austria | AUT | 2004 | Inflation | 2.061 |

| ... | ... | ... | ... | ... |

although the ordering will be different. Also note that the inflation data came from the OECD database and has information for more than 200 countries and more than 50 years. The interest rate data, however, come from the European Central Bank and only contains data from 2003 onwards and only for the 20 countries in the Euro area (plus for the entire Euro area). Let us reduce the dataset to the countries we have available from the ECB and the Years 2003 to 2023 (the last year for which we had inflation data at the time of downloading). We begin by defining sel_countries and sel_years variables which contain the countries and years respectively we wish to keep.

sel_countries = int_data_annual['Country'].unique()

sel_countries = np.delete(sel_countries,5) # remove element indexed 5 as that is "Euro area (changing composition)"

sel_years = range(2003,2024) # define the range of years to use

comb_data_long = comb_data_long.query('Country.isin(@sel_countries)') # selects all rows where the Country variable is in sel_countries

comb_data_long = comb_data_long.query('YEAR.isin(@sel_years)')

comb_data_long.info()

<class 'pandas.core.frame.DataFrame'> Int64Index: 759 entries, 6774 to 401 Data columns (total 5 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Country Code 759 non-null object 1 Country 759 non-null object 2 Indicator Type 759 non-null object 3 YEAR 759 non-null int64 4 OBS.VALUE 759 non-null float64 dtypes: float64(1), int64(1), object(3) memory usage: 35.6+ KB

Now we can do exciting things with the data!!! Let's do plot time series of inflation and interest rates across countries in the Euro area. Afterwards we will estimate a regression model.

Plotting data¶

Let's create plots with inflation rates across the EURO area and afterwards with interest rates across the EURO area. Before calling on the ggplot function (from the lets_plot library, see here for an introduction), we create helper variables (sel_inf and sel_int that help us select the inflation and interest rate data respectively).

sel_inf = comb_data_long['Indicator Type'] == "Inflation"

(

ggplot(comb_data_long[sel_inf], aes(x = 'YEAR', y = 'OBS.VALUE', color = 'Country')) +

geom_line() +

labs(title = "Inflation rates in the EURO area")

)

By and large inflation rates seem harmonised across the EURO area. And now for the interest rates.

sel_int = comb_data_long['Indicator Type'] == "Interest rate"

(

ggplot(comb_data_long[sel_int], aes(x = 'YEAR', y = 'OBS.VALUE', color = 'Country')) +

geom_line() +

labs(title = "Interst rates in the EURO area")

)

There is much more variation in interest rates between the countries of the EURO area.

Another interesting visualisation to look at would be line graphs in which, for each country, we can see the development of the the interest rate and inflation rate in the same graph. This will give us an idea of the real interest rate (nominal interest rate - inflation).

(

ggplot(comb_data_long, aes(x = 'YEAR', y = 'OBS.VALUE', color = 'Indicator Type')) +

geom_line() +

facet_wrap('Country') +

labs(title = "Interst rate and inflation rates across the EURO area")

)

You can see that in most of the larger countries in the EURO area the interest rate ends to be larger than the inflation rate, implying a positive real interest rate. However, during the covid period, real interest rates were significantly negative. You can also see that our dataset is not complete, a number of inflation data are missing.

Estimating a regression¶

When you estimate a regression it is convenient to reorganise your data from long to wide, meaning that the different variables, here inflation and interest rates, are shown as different columns and not as different observations. The Python function to turn a long data frame to a wide one is the pivot function.

index_vars = ['Country Code', 'Country', 'YEAR'] # picks the columns to keep

col_vars = 'Indicator Type' # picks the column which is to be turned into new columns (one for each value)

value_vars = 'OBS.VALUE' # picks the column from which to pick the values for the columns

comb_data_wide = comb_data_long.pivot(index = index_vars, columns = col_vars, values = value_vars).reset_index()

Try the above command without the .reset_index(). You will see that it will have combined the index_vars into one variable which is not useful. comb_data_wide now has seperate columns for inflation and interest rates (although different countries still appear in different rows, but that is ok). Now we are in a position to run a regression, say, using the Austrian

It is of course important to understand that this is just a descriptive tool and no causal interpretation should be attached to the results. You can learn more about implementing regression in Python from this page on ECLR. We need the statsmodels package.

import statsmodels.api as sm

Should you get the error message "ModuleNotFoundError: No module named 'statsmodels'" then you will first have to install the package. Go to your Terminal and use the command "pip install statsmodels".

The following code selects the data to use, defines y and x variables and estimates an OLS regression.

sel_country = comb_data_wide['Country Code'] == "AUT" # indicator variable for obs from Austria

temp_data = comb_data_wide[sel_country] # select the observations fom Austria

y = temp_data['Interest rate'] # define left hand side variable, explained

x = temp_data['Inflation'] # define right hand side variable, explanatory

mod = sm.OLS(y,sm.add_constant(x)) # define model and add constant

res = mod.fit() # estimate the model

print(res.summary()) # show regression output

OLS Regression Results

==============================================================================

Dep. Variable: Interest rate R-squared: 0.035

Model: OLS Adj. R-squared: -0.016

Method: Least Squares F-statistic: 0.6943

Date: Mon, 03 Feb 2025 Prob (F-statistic): 0.415

Time: 08:17:00 Log-Likelihood: -33.965

No. Observations: 21 AIC: 71.93

Df Residuals: 19 BIC: 74.02

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 2.4147 0.450 5.368 0.000 1.473 3.356

Inflation 0.1180 0.142 0.833 0.415 -0.178 0.414

==============================================================================

Omnibus: 2.534 Durbin-Watson: 0.499

Prob(Omnibus): 0.282 Jarque-Bera (JB): 2.088

Skew: 0.675 Prob(JB): 0.352

Kurtosis: 2.250 Cond. No. 5.43

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

You can see that there is no statistically significant relation between interest rate and inflation in Austria over the 2003 to 2023 sample period.

Summary¶

In this workthrough you learned how to

- merge two datafiles

- prepare datafiles for merging

- change data formats between wide and long

- adjust data formats to suit the analysis.

This walkthrough is part of the ECLR page.